A year before the French presidential election, Olivia Grégoire, the “En Marche” Secretary of State in charge of the Social, Solidarity and Responsible Economy, worries in the French magazine L’Express [1] about the influence of deepfakes on the 2022 election. By seizing on the fashionable buzzword, the former head of a consulting firm for SMEs waves a red rag to scare people and misses the point.

❋

Synthetic media have been a concern for politicians for around three years. In the United States, a few months after the first article on deepfakes was published [2], it was a video in which American actor Jordan Peele manipulates a digital puppet of Barack Obama [3] that set the world alight. Until then, deepfakes had been confined to the sphere of amateur porn and video mashups [4].

The video shows the technology’s potential and attracts the attention of political observers worn out by four years of the Trump presidency. The disaster scenario is taking shape before their eyes. The founding narrative of deepfakes is built around this hypothetical new threat to democracy. The Trumpian context in the United States contributes to the rise of this discourse in the spheres of power (media and politics): after all, the dystopia that some Democrats feared is unfolding in broad daylight.

In just a few days, the video made the rounds on the web, and it continues to make its mark in the United States and abroad. It remains, to this day, the most cited in articles about the influence of deepfakes on politics (second place probably going to Nancy Pelosi’s shallow fake). It is this video that Olivia Grégoire cites in her interview, and it seems to have triggered the Secretary of State’s reflection. But time has taken its toll, and we now know that yesterday’s fears will have a hard time materializing. Why?

The technology is not mature enough

Contrary to Olivia Grégoire’s assertions, it is too early to be afraid of deepfakes. While synthetic media raise a number of ethical, philosophical and practical questions that will have to be resolved sooner or later, they do not constitute a credible tool of manipulation capable of destabilizing democracies.

First pitfall: the technology is not yet mature enough. It is not yet possible to generate convincing deepfakes with a single magic mouse click. The most sophisticated deepfakes, the ones that legitimately made viewers doubt, required the work of a remarkable actor, then months of training a GAN [5], and finally the hand retouching of entire video segments to hide the seams of the digital graft as well as possible [6].

Audio remains the main stumbling block for realism. While progress in synthetic voice reconstruction is quite spectacular [7], the human ear still detects imperfections and differences in tone, pitch and rhythm in synthetic voices, which reinforces the strange feeling of being in the presence of a fake [8].

Nancy Pelosi’s shallow fake is a video manipulated using traditional methods, not artificial neural networks

Since the best is the enemy of the good, one could object that releasing crudely manipulated content is enough to do the job and fool hundreds of thousands of voters. Here again, Olivia Grégoire’s statement is at odds with reality. Another video that circulated widely and fueled the strongest fears about political manipulation, Nancy Pelosi’s shallow fake (also cited by the interviewee) [9], was actually just a slowed-down video. Here we leave the realm of deepfakes and return to a much more traditional realm of manipulation, carried out with mainstream software. There is no need to invoke neural networks, artificial intelligence, or synthetic media. Human intelligence and elbow grease are enough to make these video loops of a few seconds. The question is what impact they have.

The sounding board

In this developing narrative, it is worth making an aside and noting that the press treatment of the deepfakes issue does not do justice to the complexity of the subject. It is generally based on four main angles: the creation of a deepfake is within everyone’s reach [10]; deepfakes deceive the public and undermine the common ground of reality; potential political manipulation through deepfakes endangers democracy; and, less prominently, deepfakes allow the creation of non-consensual pornographic videos (so-called revenge porn, for example) [11]. To this, we can add a more technical subcategory devoted to the detection of deepfakes and to methods, tips, and tricks to identify synthetic media.

This type of treatment leads de facto to a simplification of the context in which deepfakes appeared, caricatures the motivations of their creators, exaggerates the real or supposed dangers, and ends up conveying a point of view that is rather anxiety-inducing compared to the reality of the threat [12]. In this respect, Olivia Grégoire’s interview does not help to analyze the questions raised by deepfakes constructively.

Looking at the publications since 2018, clear phases emerge in the thematic treatment. It was the deep-porns involving celebrities that brought the phenomenon to the attention of the general public. Then the prospect of the American elections raised the first wave of questions about democratic issues (this is where Jordan Peele’s video appears). The publication of a report on the proliferation of deep-porn videos brought the debate back to the issue of women victims of these videos (this time with a stronger focus on women who are not celebrities). Then the focus shifted back to security issues (often in a political framework, but increasingly also in an economic one) with the appearance of various espionage cases [13].

For each of these waves, the question of countermeasures, prevention, detection, and legislative provisions arose. The media narrative built around the spread of synthetic media in the digital cultural space has very rarely explored the very reasons for the existence of deepfakes: what technologies allow these manipulations, what companies are behind these technologies, what phenomenon allows the multiplication of pornographic content on the web, what the market for online pornography is, whether political advertising is desirable, and so on. Nor has it explored the ethical questions raised by the technical solutionism put forward to regulate the existence of deepfakes: digital tracing of the content created (via blockchain or NFTs?), of its distribution and of its consumption, restrictions on the types of digital content that can be created, and temporal restrictions on the distribution of this content, all of which are prejudicial to individual freedoms, to freedom of expression and to artistic freedom. At the very least, the debate should be opened.

A relative impact?

Is the production and broadcasting of manipulated content enough to manipulate the crowds? Can voters change their vote after discovering a political deepfake? A number of research studies examine the effects of synthetic media on the human psyche and question precisely their impact on political decisions. These studies, which are still relatively scattered, do suggest a long-term impact on the individual, but one that is very limited and unlikely to overturn a vote.

A first study on political behavior [14] indicates, for example, that in some specific cases of microtargeting, deepfakes can influence how one perceives the politicians they target and even the parties to which those politicians belong. Another study, conducted in Great Britain, shows that deepfakes foster in viewers a kind of cynical distrust of online information content, where a certain credulity was expected [15].

To these modest results, we must add that the deepfakes produced are only a drop in the ocean of content that is created every day on the internet. When the latest Sensity report points to an exponential explosion in the number of deepfakes, bringing their number to more than 90,000, one must realize that 90% of them are deep-porns, that the remaining 10% include many experimental deepfakes and deepfakes made by amateurs, and that only 1% of these synthetic videos are ultra-realistic creations capable of eventually challenging everyone’s critical sense.
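To make these proportions concrete, here is a minimal arithmetic sketch in Python. It uses only the figures quoted above (90,000 deepfakes, 90% deep-porns, 1% ultra-realistic). The text does not say whether the 1% applies to the whole corpus or only to the non-pornographic 10%, so both readings are computed; the variable and function names are illustrative assumptions.

```python
# Figures quoted in the text from the Sensity report.
TOTAL_DEEPFAKES = 90_000
DEEP_PORN_PCT = 90        # share of deep-porns, in percent
ULTRA_REALISTIC_PCT = 1   # share of ultra-realistic videos, in percent


def share(count: int, percent: int) -> int:
    """Return `percent` percent of `count`, rounded down."""
    return count * percent // 100


deep_porn = share(TOTAL_DEEPFAKES, DEEP_PORN_PCT)
other = TOTAL_DEEPFAKES - deep_porn

# Assumption 1: the 1% is taken over the whole corpus.
ultra_of_total = share(TOTAL_DEEPFAKES, ULTRA_REALISTIC_PCT)
# Assumption 2: the 1% is taken over the non-pornographic remainder only.
ultra_of_other = share(other, ULTRA_REALISTIC_PCT)

print(f"deep-porns:                 {deep_porn}")
print(f"other deepfakes:            {other}")
print(f"ultra-realistic (of total): {ultra_of_total}")
print(f"ultra-realistic (of other): {ultra_of_other}")
```

Under either reading, the number of truly convincing videos stays in the tens or hundreds, not the tens of thousands.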

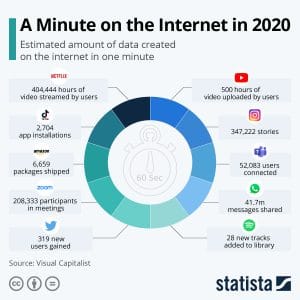

An estimate of the volume of data created per minute in 2020 – Statista

It is also worth remembering that every minute, 500 hours of video content are uploaded to YouTube. On Instagram, 95 million photos and videos are shared daily. Every day, humanity produces 2.5 quintillion bytes of data [16]. It is in this already crowded universe that deepfakes are emerging: a universe where the attention of Internet users is becoming a strategic issue, where solicitations come more and more from our physical (with connected objects) and digital social environments, and where traditional media continue to play a relatively important role.
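As a rough order-of-magnitude check, the short Python sketch below compares these daily volumes with the roughly 90,000 deepfakes counted by Sensity. It only rearranges figures quoted in the article; the comparison of a cumulative count with a daily flow is a deliberate simplification, and the variable names are illustrative.

```python
# Figures quoted in the article.
YOUTUBE_HOURS_PER_MINUTE = 500
INSTAGRAM_POSTS_PER_DAY = 95_000_000
TOTAL_DEEPFAKES = 90_000  # cumulative count, not a daily figure

MINUTES_PER_DAY = 60 * 24

# Hours of video uploaded to YouTube in a single day.
youtube_hours_per_day = YOUTUBE_HOURS_PER_MINUTE * MINUTES_PER_DAY

# Instagram posts shared in one day for every deepfake ever counted.
posts_per_deepfake = INSTAGRAM_POSTS_PER_DAY / TOTAL_DEEPFAKES

print(f"YouTube hours uploaded per day: {youtube_hours_per_day:,}")
print(f"Daily Instagram posts per known deepfake: {posts_per_deepfake:,.0f}")
```

In other words, every deepfake identified so far is matched by roughly a thousand Instagram posts on any single day.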

The influence of deepfakes still seems to be limited, and statements predicting the end of democracy should be treated with great caution.

There is no doubt that some actors will try to exploit the potential of synthetic media to disrupt, to their advantage, the precarious balance in which our democracies find themselves. Some of them may even achieve minor successes in the next few years, but we should not expect an immediate revolution.

So what’s the problem? No problem?

We have to understand that deepfakes are with us and they won’t go away.

Gradually, synthetic media will percolate from the barely frequented fringes of the internet to more mainstream industries. Already, Hollywood studios are exploring their potential in film production. There are other possibilities, in information, translation, autonomous vehicles, and medicine.

A number of countries around the world have passed new legislation regarding the use of deepfakes [17]. Some of these laws deal with the publication of manipulated videos in an electoral context and define ban periods. Others deal with the issue of pornography and the protection of victims of revenge porn. Legislators are reacting to an emergency that does not exist and forgetting to think about the future of these technologies, which for now appear inevitable.

Because to discuss these technologies [18] is also to ask the question of their usefulness, of their necessary existence in the public sphere, or of their emptiness. One could very well ban deepfakes. A country, through its legislative body, could very well decide that synthetic media pose more problems than they solve and ban them entirely. This choice is political; it is not a matter of mere inevitability.

To conclude: the legal arsenal in France largely covers the harms that can be caused by the malicious use of synthetic videos. Defamation, identity theft, image rights, and even contempt of a person in authority can apply in most cases. The challenge does not lie in characterizing a possible offense. The challenge is to find the author of the deepfake that has caused damage. In this respect, the situation is no different from cases of fraud, trafficking in child pornography images, or other similar offenses. It takes resources to track down the perpetrators and dismantle the networks. These are means that only a government can allocate. Would Olivia Grégoire grant substantial means to advance on this subject?

Notes:

| | |
|---|---|
| ↑1 | “Olivia Grégoire: ‘On n’échappera pas aux deepfakes en 2022’”, L’Express, April 10, 2021 |
| ↑2 | “AI-Assisted Fake Porn Is Here and We’re All Fucked,” Motherboard, Vice, Cole Samantha, December 11, 2017 |
| ↑3 | “You Won’t Believe What Obama Says in This Video! 😉”, Buzzfeed Video, Peele Jordan, April 17, 2018 |
| ↑4 | As a reminder, “the mashup is above all a state of mind. Intimately linked to the very nature of the Internet, to its history, its uses, it is a pillar of the participative web. However, it inherits from a much older collage practice, coming from the cinematographic avant-garde and Found Footage. It is therefore a form of artistic expression that calls for the recycling and reuse of audiovisual materials produced by others.” |
| ↑5 | An artificial neural network, in short an algorithm, involved in the creation of deepfakes |
| ↑6 | “Chris Umé: the @deeptomcruise deepfake is made to alert the public,” March 5, 2021 |
| ↑7 | “[AI Voice] Dating Tips from Kylo Ren”, the synthetic voice of American actor Adam Driver, Speaking of AI, February 14, 2021 |
| ↑8 | This is the notion of the “Uncanny Valley”, introduced in the 1970s by the roboticist Masahiro Mori: when an object reaches a certain degree of anthropomorphic resemblance, a feeling of anguish and uneasiness appears. “Petit détour par la vallée de l’étrange”, CNRS le journal, February 16, 2016 |
| ↑9 | “Real v fake: debunking the ‘drunk’ Nancy Pelosi footage-video,” The Guardian, May 24, 2019 |
| ↑10 | The reality is more subtle: it certainly doesn’t take an army of special effects creators anymore to make a deepfake, but the task still requires a lot of knowledge, techniques, and solid equipment |
| ↑11 | (2020) Politics and porn: how news media characterizes problems presented by deepfakes, Critical Studies in Media Communication, 37:5, 497-511, DOI: 10.1080/15295036.2020.1832697 |
| ↑12 | Since February 2018, I have been able to build a bibliographic corpus of 680 entries (articles, studies, reports) describing the phenomenon of deepfakes; very few of them address, for example, the positive aspects linked with the development of synthetic media. |
| ↑13 | “Oliver Taylor, the model student and pure fiction”, July 28, 2020 |
| ↑14 | Dobber, Tom, Nadia Metoui, Damian Trilling, Natali Helberger, and Claes de Vreese. “Do (Microtargeted) Deepfakes Have Real Effects on Political Attitudes?” The International Journal of Press/Politics 26, no. 1 (January 2021): 69-91. https://doi.org/10.1177/1940161220944364. |
| ↑15 | Vaccari, Cristian, and Andrew Chadwick. “Deepfakes and Disinformation: Exploring the Impact of Synthetic Political Video on Deception, Uncertainty, and Trust in News.” Social Media + Society (January 2020). https://doi.org/10.1177/2056305120903408. |
| ↑16 | “How Much Data Is Created Every Day in 2020?”, March 18, 2021 |
| ↑17 | In Germany, deepfakes fall under common law. In the United States, some states, such as California or Texas, have passed specific legislation. Bill S. 3805 was introduced in the Senate on December 21, 2018, to regulate deepfakes at the federal level. Other countries such as New Zealand, Korea, or England have passed legislation or introduced bills to this effect as well |
| ↑18 | And this is not limited to synthetic media |